💸 When AWS NAT Gateway Costs Spike Out of Control (and How to Be Prepared)

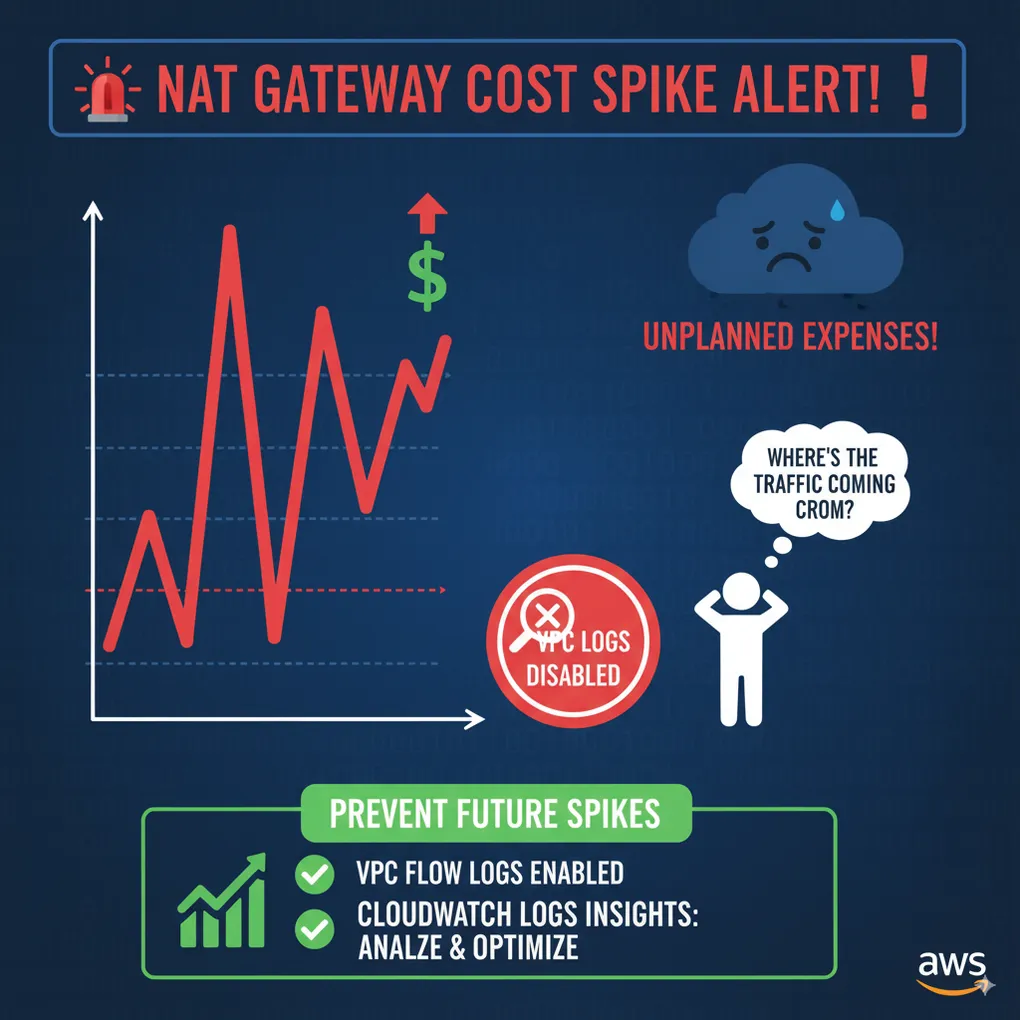

One of the most frustrating surprises in AWS is getting hit with a massive NAT Gateway bill you weren’t expecting.

It usually looks like this:

- Everything seems normal.

- Suddenly, NAT Gateway costs skyrocket overnight.

- You scramble to figure out what resource is making all these outbound calls.

- And then you realize… you never enabled VPC Flow Logs.

👉 At that point, there is nothing to investigate. Even AWS Support can’t retroactively tell you where that traffic came from.

Why NAT Gateway Costs Can Go Insane

NAT Gateways are billed based on:

- Per-hour cost (a fixed charge per gateway)

- Per-GB data processing (every byte processed costs money)

During a spike, it’s usually the data processing that blows up your bill.
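To see why data processing dominates, here is a rough back-of-the-envelope sketch. The two rates are illustrative assumptions, not quoted prices, so check the current pricing for your region before relying on the numbers.

```python
# Illustrative rates only (assumption): look up current pricing for your region.
HOURLY_RATE_USD = 0.045   # assumed fixed charge per gateway-hour
PER_GB_RATE_USD = 0.045   # assumed data processing charge per GB


def nat_gateway_cost(hours: float, gb_processed: float) -> dict:
    """Split an estimated NAT Gateway bill into its two components."""
    hourly = hours * HOURLY_RATE_USD
    data = gb_processed * PER_GB_RATE_USD
    return {
        "hourly": round(hourly, 2),
        "data": round(data, 2),
        "total": round(hourly + data, 2),
    }


# A quiet month versus a month where a runaway job pushes 50 TB through the gateway.
normal = nat_gateway_cost(hours=730, gb_processed=500)
spike = nat_gateway_cost(hours=730, gb_processed=50_000)
print("normal:", normal)
print("spike: ", spike)
```

Under these assumed rates, the hourly component is identical in both months, while the data processing component grows a hundredfold along with the traffic.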

This often happens because:

- A misconfigured resource in a private subnet starts calling the internet unnecessarily.

- An application is retrying failed requests in a tight loop.

- Logging or monitoring agents are configured to send large volumes of data externally.

- Developers accidentally pull large datasets from the internet through the NAT.
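The tight retry loop is the easiest of these to guard against in code. Here is a minimal sketch of capped exponential backoff with jitter. The helper name `call_with_backoff` and its default values are hypothetical, chosen only for illustration.

```python
import random
import time


def call_with_backoff(operation, max_attempts=5, base=0.5, cap=30.0, sleep=time.sleep):
    """Call operation(); on failure, wait with capped exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # give up instead of retrying forever
            ceiling = min(cap, base * 2 ** attempt)
            sleep(random.uniform(0, ceiling))  # jitter spreads out retries


# Demo with a fake operation that fails twice, recording waits instead of sleeping.
attempts = {"count": 0}


def flaky_request():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("simulated failure")
    return "ok"


waits = []
print(call_with_backoff(flaky_request, sleep=waits.append), "after", len(waits), "waits")
```

Bounding both the number of attempts and the delay keeps a failing dependency from turning into a steady stream of billable traffic through the NAT.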

Without visibility, you’re left guessing.

The Critical Lesson: Enable VPC Flow Logs

The only way to have evidence of what happened is to enable VPC Flow Logs in advance.

- Each record captures the source and destination addresses and ports, the byte and packet counts, and the start and end of the capture window.

- They can be sent to CloudWatch Logs or S3 for long-term analysis.

- With CloudWatch Logs Insights, you can query to find top contributors to NAT traffic.
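Enabling Flow Logs can be scripted. The sketch below uses boto3's `create_flow_logs` call and assumes delivery to an S3 bucket that already exists and permits log delivery. The VPC ID and bucket ARN are hypothetical placeholders.

```python
def build_flow_log_request(vpc_id: str, bucket_arn: str) -> dict:
    """Build the arguments for EC2 CreateFlowLogs, delivering to S3."""
    return {
        "ResourceIds": [vpc_id],
        "ResourceType": "VPC",
        "TrafficType": "ALL",           # capture accepted and rejected traffic
        "LogDestinationType": "s3",
        "LogDestination": bucket_arn,
        "MaxAggregationInterval": 60,   # seconds; the default is 600
    }


def enable_flow_logs(vpc_id: str, bucket_arn: str) -> dict:
    """Create the flow log. Requires boto3 and AWS credentials."""
    import boto3  # imported here so the request builder works without boto3

    ec2 = boto3.client("ec2")
    return ec2.create_flow_logs(**build_flow_log_request(vpc_id, bucket_arn))


if __name__ == "__main__":
    # Hypothetical identifiers; replace them before calling enable_flow_logs().
    print(build_flow_log_request(
        "vpc-0123456789abcdef0",
        "arn:aws:s3:::example-flow-logs-bucket",
    ))
```

Separating the request builder from the API call makes it easy to review exactly what will be sent before anything is created in the account.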

Example: Find Top Talkers Through NAT

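filter interfaceId = "eni-xxxxxxxxxxxxxxxxx"

| stats sum(bytes) as totalBytes by srcAddr, dstAddr

| sort totalBytes desc

| limit 20

This query shows the top source/destination pairs seen on the NAT Gateway's network interface. Replace the placeholder with the ENI ID of your NAT Gateway. In the default log format, traffic from a private-subnet resource appears with that resource's IP in srcAddr and the NAT Gateway's private IP in dstAddr, which makes this query well suited to identifying misbehaving EC2 instances, containers, or services.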
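If your Flow Logs go to S3 instead of CloudWatch Logs, the same aggregation can be sketched offline. The example below assumes the default (version 2) record format. The ENI ID and sample records are made up for illustration.

```python
from collections import Counter

# Field order of the default (version 2) flow log record format.
FIELDS = ("version account_id interface_id srcaddr dstaddr srcport dstport "
          "protocol packets bytes start end action log_status").split()


def top_talkers(lines, nat_eni_id, limit=20):
    """Sum bytes per (srcaddr, dstaddr) pair for records on the NAT gateway's ENI."""
    totals = Counter()
    for line in lines:
        parts = line.split()
        if len(parts) != len(FIELDS):
            continue  # skip blank lines and records in a custom format
        record = dict(zip(FIELDS, parts))
        if record["interface_id"] != nat_eni_id or record["log_status"] != "OK":
            continue  # NODATA and SKIPDATA records carry "-" instead of numbers
        totals[(record["srcaddr"], record["dstaddr"])] += int(record["bytes"])
    return totals.most_common(limit)


# Made-up sample: 10.0.0.220 stands in for the NAT gateway's private IP.
sample = [
    "2 123456789012 eni-0abc 10.0.1.15 10.0.0.220 44321 443 6 120 900000 1700000000 1700000060 ACCEPT OK",
    "2 123456789012 eni-0abc 10.0.1.15 10.0.0.220 44322 443 6 80 600000 1700000060 1700000120 ACCEPT OK",
    "2 123456789012 eni-0abc 10.0.2.7 10.0.0.220 51000 443 6 10 5000 1700000000 1700000060 ACCEPT OK",
    "2 123456789012 eni-0abc - - - - - - - 1700000000 1700000060 - NODATA",
    "2 123456789012 eni-0other 10.0.3.3 10.0.0.99 40000 443 6 5 999999999 1700000000 1700000060 ACCEPT OK",
]
for (src, dst), total in top_talkers(sample, nat_eni_id="eni-0abc"):
    print(src, dst, total)
```

Here the two records from 10.0.1.15 are summed into a single entry that ranks first, and the record on the unrelated interface is ignored.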

Proactive Monitoring

Don’t just enable logs — take the next step:

- Set up CloudWatch Alarms on the NAT Gateway's byte-count metrics (for example, BytesOutToDestination in the AWS/NATGateway namespace).

- Create budgets in AWS Budgets, or enable AWS Cost Anomaly Detection, to catch unexpected spend.

- Use Service Control Policies (SCPs) to restrict who can create NAT Gateways or internet-facing routes, and tag policies so that every resource behind the NAT can be attributed to an owner.
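As a starting point for the alarm, here is a sketch built on boto3's `put_metric_alarm`. It assumes an existing SNS topic for notifications. The gateway ID, topic ARN, alarm name, and threshold are hypothetical and should be tuned to your normal traffic.

```python
def build_nat_alarm(nat_gateway_id: str, threshold_gib_per_hour: float,
                    sns_topic_arn: str) -> dict:
    """Build arguments for CloudWatch PutMetricAlarm on NAT outbound bytes."""
    return {
        "AlarmName": f"nat-bytes-out-{nat_gateway_id}",
        "Namespace": "AWS/NATGateway",
        "MetricName": "BytesOutToDestination",
        "Dimensions": [{"Name": "NatGatewayId", "Value": nat_gateway_id}],
        "Statistic": "Sum",
        "Period": 3600,                 # evaluate hourly totals
        "EvaluationPeriods": 1,
        "Threshold": threshold_gib_per_hour * 1024 ** 3,  # GiB to bytes
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


def create_nat_alarm(nat_gateway_id: str, threshold_gib_per_hour: float,
                     sns_topic_arn: str) -> None:
    """Create the alarm. Requires boto3 and AWS credentials."""
    import boto3  # imported here so the builder works without boto3

    cloudwatch = boto3.client("cloudwatch")
    cloudwatch.put_metric_alarm(
        **build_nat_alarm(nat_gateway_id, threshold_gib_per_hour, sns_topic_arn)
    )


if __name__ == "__main__":
    # Hypothetical identifiers, shown only to illustrate the request shape.
    print(build_nat_alarm(
        "nat-0123456789abcdef0", 50,
        "arn:aws:sns:us-east-1:123456789012:example-alerts",
    ))
```

BytesOutToDestination only covers traffic leaving toward the internet. If large downloads are the concern, a second alarm on BytesInFromDestination would cover the return path.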

Common Causes You Can Eliminate

From past experience, here are frequent culprits:

- Instances in private subnets downloading OS/package updates directly from the internet (instead of using AWS Systems Manager or local mirrors).

- Misconfigured container tasks making outbound telemetry calls.

- Third-party agents (logging, monitoring, APM) sending data without rate limiting.

- Developers bypassing VPC endpoints and hitting public endpoints through NAT.

What’s Next?

Enabling logs is step one. In the next post, I’ll cover practical tips to reduce NAT Gateway costs when traffic is legitimate, including VPC Endpoints, PrivateLink, and alternatives.

Final Thoughts

A NAT Gateway spike without VPC Flow Logs is like trying to investigate a fire with no smoke alarms installed.

✅ Always enable Flow Logs in advance.

✅ Use Logs Insights to identify top contributors.

✅ Treat NAT as a cost visibility and control problem, not just a networking one.

💡 Have you ever been hit with an unexpected NAT bill? How did you track it down? Share your story — others can learn from it.